Here are some thoughts on Cathal Horan's article "When Not to Choose the Best NLP Model." The world...

High-performance Inferencing with Transformer Models on Spark

A tutorial with code using PySpark, Hugging Face, and AWS GPU instances

Are you looking for up to a 100-fold increase in speed and a 50% reduction in costs with Hugging Face or Tensorflow models? By utilizing GPU instances and Spark, we can perform inferencing on anywhere from two to hundreds of GPUs simultaneously, enhancing performance with ease.

Overview

- Setup Driver and Worker instances

- Partitioning data for parallelization

- Inferencing with Transformer Models

- Discussion

Setup Driver and Worker instances

For this tutorial, we will be using DataBricks, and you may sign up for a free account if you still need access to one. DataBricks must connect to a cloud hosting provider like AWS, Google Cloud Platform, or Microsoft Azure to run GPU instances.

This exercise will use the AWS GPU instances of type “g4dn.large.” However, you may still follow these instructions using Google Cloud or Microsoft Azure and select an equivalent GPU instance.

Once you have set up your DataBricks account, log in and create a cluster with the configuration shown below:

Next, create a notebook and attach it to the cluster by selecting it in the dropdown menu:

Now, we are all set to code.

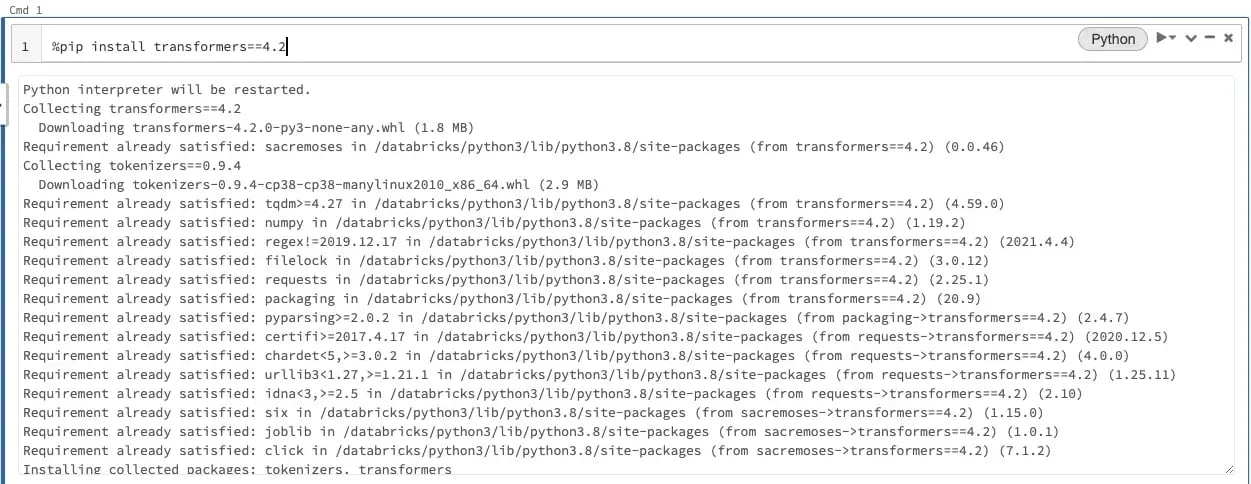

Installing Hugging Face Transformers

Firstly, let us install the Hugging Face Transformers to the cluster.

Run this in the first cell of the notebook:

%pip install transformers==4.2

Hugging Face Transformers Python library installed on the cluster

Libraries installed this way are called Notebook-scoped Python libraries. They are convenient, but they must be run at the start of a session before other code because they reset the Python interpreter.

At this point, we start with actual Python code. In the next cell, run:

Congratulations! Hugging Face Transformers will be successfully installed if the above line runs without errors.

Partitioning data for parallelization

The easiest way to create data that Spark can process in parallel is by creating a Spark DataFrame. For this exercise, a DataFrame with two rows of data will suffice:

We get this DataFrame:

The Transformer model for this exercise takes in two text inputs per row. We name them “title” and “abstract” here.

For the curious, here is an excellent article by Laurent Leturgez that dives into Spark partitioning strategies:

On Spark Performance and partitioning strategies

Inferencing with Transformer Models

We shall use the fantastic Pandas UDF for PySpark to process the Spark DataFrame in memory-efficient partitions. Each partition in the DataFrame is presented to our code as a Pandas DataFrame, which you will see below, is called “df” as a parameter of the function “embed_func.” A Pandas DataFrame makes it convenient to process data in Python.

Code for embed_func():

You might have noticed two things from the code above:

- The code further splits the input text found in the Pandas DataFrame into chunks of 20 as defined by the variable “batch_size.”

- We use Spectre by AllenAI — a pre-trained language model to generate document-level embedding of documents (pre-print here.) We can easily swap this for another Hugging Face model like BERT.

Working around GPU memory limits

When the GPU makes inferences with this Hugging Face Transformer model, the inputs and outputs are stored in memory. GPU memory is limited, especially since a large transformer model requires a lot of memory to store its parameters, leaving comparatively little memory to hold the inputs and outputs.

Hence, we control the memory usage by inferencing just 20 rows at a time. Each time 20 rows are processed, we copy the output from the GPU to a NumPy array, which resides in CPU memory (which is more abundant) with the code at Line 21 with “.cpu().detach().numpy()”.

Finally - Transformer model inferencing on GPU

As mentioned above, this is where the execution of Pandas UDF for PySpark happens. In this case, the Pandas UDF is “embed_func” itself. Read the link above to learn more about this powerful PySpark feature.

The resulting output of this exercise is a DataFrane containing rows of floating-point arrays that represent document embeddings. Spectre creates embeddings that are vectors of 768 floats.

Discussion

I hope you see how Spark, DataBricks, and GPU instances make scaling up inferencing with large transformer models relatively trivial.

The technique shown here allows for running inference on millions of rows and completing it in hours instead of days or weeks. Thus, processing big data sets on large transformer models becomes feasible in more situations.

Cost Savings

But wait, there is more. Despite costing 5 to 20 times a CPU instance, inferencing done by a GPU instance can be more cost-effective because it is 30 to 100 times faster.

Since we pay for the instance on an hourly basis, time is money here.

Less time spent on plumbing

Data is easily imported into DataBricks and saved as Parquet files on AWS S3 buckets or, even better, Data Lake tables (a.k.a. Hive tables on steroids). Then, we can manipulate the data via Spark Dataframes, which, as this article shows, is trivial to parallelize for transformation and inferencing.

All data, code, and computing are accessible and managed in one place “on the cloud.” This neat solution is also intrinsically scalable as the data grows from gigabytes to terabytes, making it more “future-proof.”